I’m trying to capture a large, complex site that has around 450 individual pages. It would make QA-ing easier if I were able to export an index of captured URLs from the WARC - is there any way to do this?

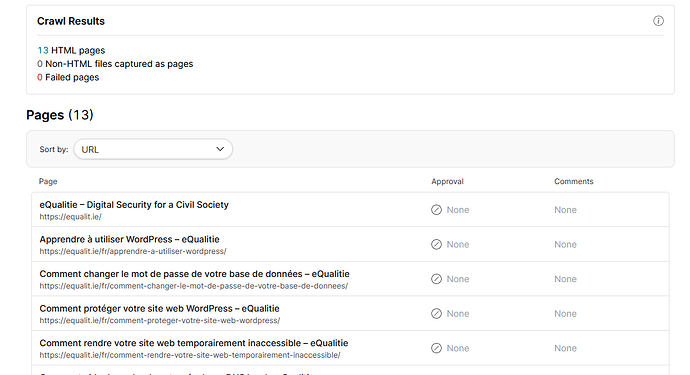

Are you capturing it using Browsertrix? If so, maybe check out our QA tools. A full list of pages available in your archived item can be found on the Quality Assurance tab.

If you’re not using Browsertrix… Well, it’s a bit more involved.

The lack of a pages index is one of the big gaps in the standalone WARC file format, and it’s why we developed WACZ! If you create a WACZ file from your WARCs with py-wacz and its --detect-pages command line option, it will scan your WARCs for pages and output an index inside the WACZ file, which should give you what you want here. A WACZ is a ZIP file, so you can open it with any un-archiving utility that supports ZIP to reveal its component WARCs, along with the index, which is saved as a CDX file.

You can load this CDX file in a text editor to get a full list of all the resources captured in the archive. Searching for the text/html media type will give you an idea of how many HTML files have been captured (these include the pages of your site, but also things like YouTube embeds).
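If you’d rather not eyeball the CDX in a text editor, here’s a minimal Python sketch of the same text/html filter. It assumes the index is in the CDXJ layout (a search key, a timestamp, then a JSON object with `url` and `mime` fields on each line); that layout and the function name `html_urls_from_cdxj` are my assumptions for illustration, so check a few lines of your own file first.

```python
import json


def html_urls_from_cdxj(lines):
    """Return the sorted, de-duplicated URLs of text/html captures.

    Assumes each line looks like: "<search key> <timestamp> <JSON object>".
    Lines that do not match that shape are skipped.
    """
    urls = set()
    for line in lines:
        parts = line.strip().split(" ", 2)
        if len(parts) < 3:
            continue  # blank or unexpected line
        try:
            record = json.loads(parts[2])
        except json.JSONDecodeError:
            continue  # third field was not JSON
        if not isinstance(record, dict):
            continue
        # "mime" and "url" are the field names assumed here
        if record.get("mime", "").startswith("text/html") and "url" in record:
            urls.add(record["url"])
    return sorted(urls)


# Made-up sample lines, only to show the expected shape
sample = [
    'com,example)/ 20240101000000 {"url": "https://example.com/", "mime": "text/html", "status": "200"}',
    'com,example)/style.css 20240101000001 {"url": "https://example.com/style.css", "mime": "text/css", "status": "200"}',
    'com,example)/about 20240101000002 {"url": "https://example.com/about", "mime": "text/html", "status": "200"}',
]
print(html_urls_from_cdxj(sample))
```

To run it on a real index, pass an open file instead of the sample list, e.g. `html_urls_from_cdxj(open("index.cdx", encoding="utf-8"))`. If your index turns out to be gzip-compressed, opening it with `gzip.open(path, "rt")` should work the same way.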

You can also load the full WARC or WACZ in ReplayWeb.page, which is a much easier path if you just need to see what’s in there, because it lets you view the actual archived content alongside the page list! That’s likely easier than opening the CDX alone if you want to manually check each page.

I’ll also note that in writing this, I’ve realized that the ReplayWeb.page web application doesn’t seem to accept CDX files in the upload form, but the desktop app will open them no problem if you right-click your CDX file in the file browser and select “open with”! Another ReplayWeb.page quirk is that it currently only loads resources in increments of 100, so you’ll need to scroll down to the bottom of the list to load them all before filtering by Media Type.

That’s the best answer I’ve got! If it seems complicated, that’s because it is… And also why we built a much easier QA workflow into Browsertrix

Thanks so much for such a detailed reply, Hank!

Rather than visually comparing the live & archived sites by hand, I’m hoping to compare the list of captured URLs with the list of URLs that I know comprise the site. I’ve been capturing using ArchiveWeb.page - am I right to think that exporting from ArchiveWeb.page in WACZ format would therefore give me an indexed CDX file that I could use for this purpose?

Yep! The WACZ file will include the index!

You may have to do some data cleanup / formatting to compare it against your list of URLs in a nice fashion, but the data will be in that file.
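As a rough idea of what that cleanup might look like, here’s a small Python sketch that normalizes both lists before diffing them. The normalization rules (ignore the scheme, a leading "www.", the fragment, and a trailing slash) and the names `normalize` and `compare` are assumptions on my part, so loosen or tighten them to match how your site’s URLs actually vary.

```python
from urllib.parse import urlsplit


def normalize(url):
    """Loosely normalize a URL so near-identical forms compare equal.

    Assumed rules: drop scheme and fragment, lowercase the host,
    strip a leading "www." and any trailing slash on the path.
    """
    parts = urlsplit(url.strip())
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    path = parts.path.rstrip("/")
    query = "?" + parts.query if parts.query else ""
    return host + path + query


def compare(expected, captured):
    """Return (missing, extra): expected URLs not captured, and the reverse."""
    expected_by_key = {normalize(u): u for u in expected}
    captured_by_key = {normalize(u): u for u in captured}
    missing = sorted(expected_by_key[k] for k in expected_by_key.keys() - captured_by_key.keys())
    extra = sorted(captured_by_key[k] for k in captured_by_key.keys() - expected_by_key.keys())
    return missing, extra


# Made-up example lists
expected = ["https://example.com/", "https://example.com/about/", "https://example.com/contact"]
captured = ["http://www.example.com", "https://example.com/about", "https://example.com/blog"]

missing, extra = compare(expected, captured)
print("Not captured:", missing)
print("Captured but not expected:", extra)
```

The "missing" list is the one that matters for QA; the "extra" list is mostly a sanity check for things like embeds or redirects that ended up in the capture.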

Should also mention that there’s a pages.jsonl file in the WACZ’s pages directory that also includes the list of pages with their URLs. It’s a JSON Lines file (one JSON object per line), so you can parse it with any program that reads that format. May be an easier way to extract the URLs because it won’t include the embeds / non-“page” HTML files!
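Here’s a minimal Python sketch of pulling the URLs out of pages.jsonl without unzipping the WACZ by hand. It assumes the file sits at `pages/pages.jsonl` inside the ZIP and that each page entry has a `url` field, with a first header line that has none; the function name `page_urls_from_wacz` is just for illustration.

```python
import io
import json
import zipfile


def page_urls_from_wacz(wacz):
    """Return the page URLs listed in pages/pages.jsonl inside a WACZ.

    `wacz` can be a file path or a file-like object. Lines without a
    "url" field (such as a header line) are skipped.
    """
    urls = []
    with zipfile.ZipFile(wacz) as zf:
        with zf.open("pages/pages.jsonl") as fh:
            for raw in io.TextIOWrapper(fh, encoding="utf-8"):
                raw = raw.strip()
                if not raw:
                    continue
                entry = json.loads(raw)
                if "url" in entry:
                    urls.append(entry["url"])
    return urls


# Build a tiny stand-in WACZ in memory so the sketch runs on its own
demo = io.BytesIO()
with zipfile.ZipFile(demo, "w") as zf:
    zf.writestr(
        "pages/pages.jsonl",
        "\n".join([
            json.dumps({"format": "json-pages-1.0", "id": "pages", "title": "All Pages"}),
            json.dumps({"id": "1", "url": "https://example.com/", "title": "Home"}),
            json.dumps({"id": "2", "url": "https://example.com/about", "title": "About"}),
        ]),
    )

print(page_urls_from_wacz(demo))
```

With a real export you’d call something like `page_urls_from_wacz("my-site.wacz")` (a hypothetical filename) and write the result out one URL per line to compare against your own list.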

This is precisely what I was looking for - thank you so much!